
#100DaysOfNLP Day 3: Dependency Parsing

This post covers Day 3 of my #100DaysOfNLP journey, even though I’m uploading it on Day 5! I was caught up with schoolwork on Monday and Tuesday and couldn’t post then. Anyway, the subject at hand is dependency parsing, which is an important part of Natural Language Processing. Lecture five of Stanford’s cs224n course covers what you need to know about dependency parsing, and I’ll aim to summarize the topic in this short post.

What is Dependency Parsing?

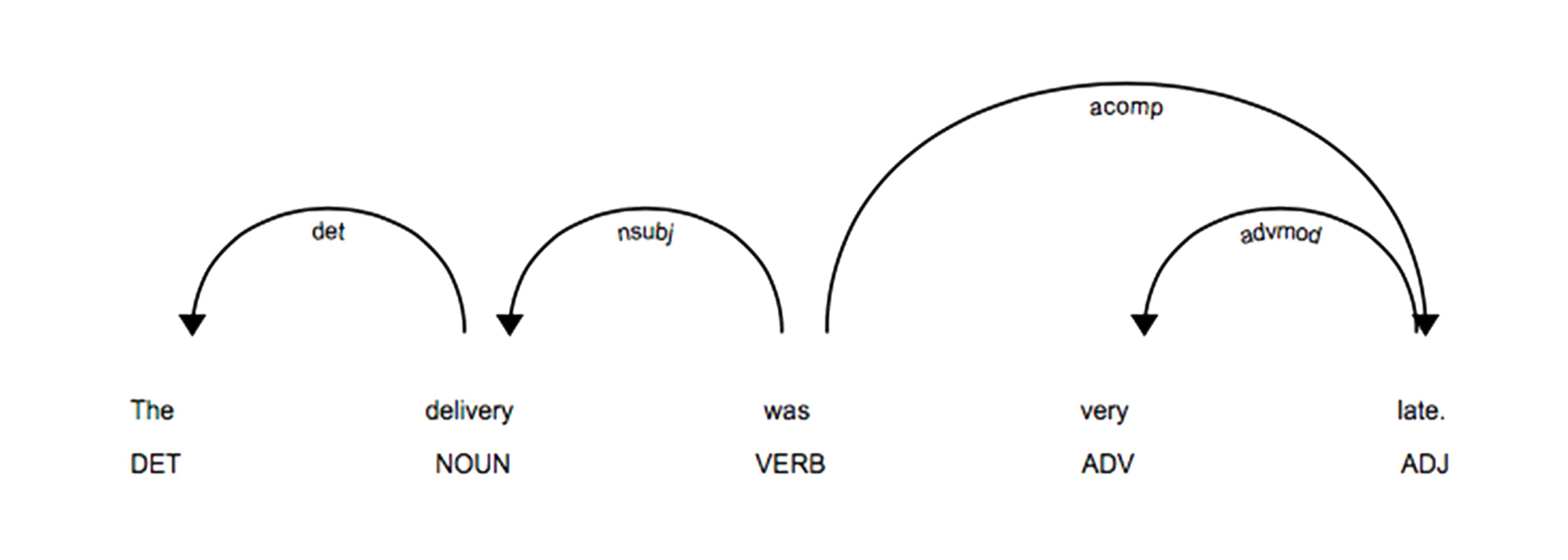

Dependency parsing is the process of working out the grammatical structure of a sentence by identifying the relationships between “head” words and the words that depend on them. Here’s an example of a sentence that has been dependency parsed:

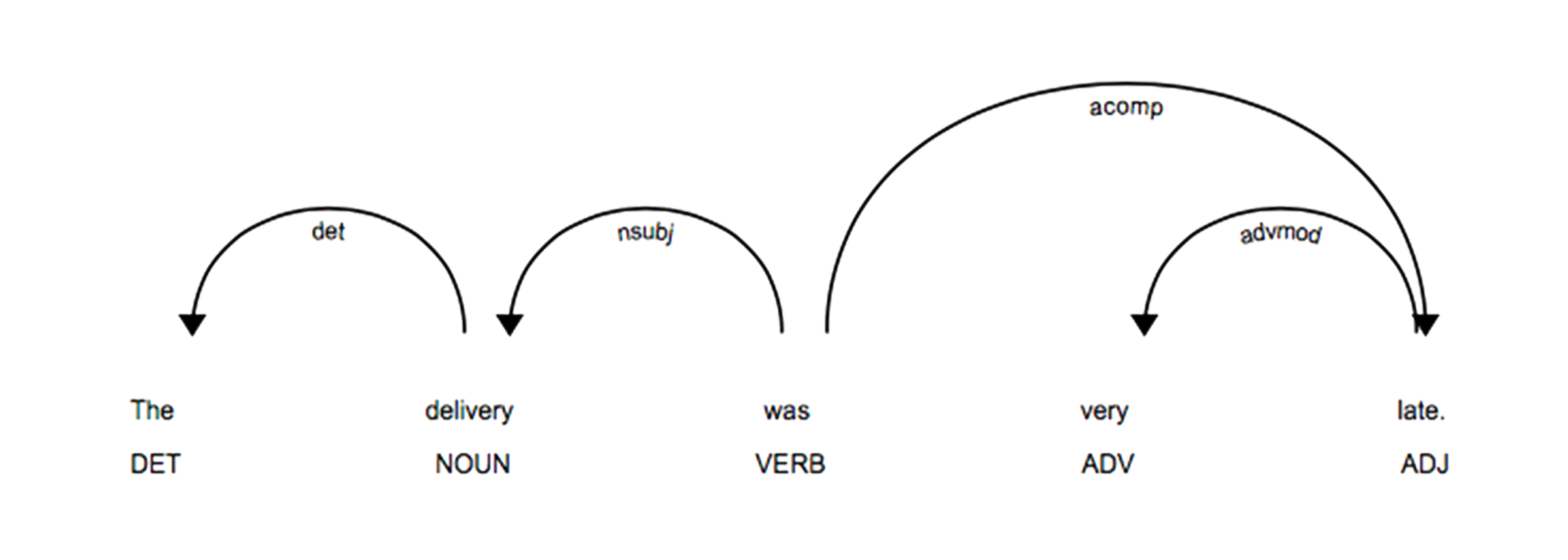
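To make the idea of heads and dependents concrete, here is a minimal Python sketch of one way a parse could be stored. Everything in it is an assumption for illustration: the sentence "She ate fresh fish" is made up (it is not the one in the image above), the parse is written by hand rather than produced by a parser, the relation labels follow the Universal Dependencies style, and the `Token`, `dependents`, and `describe` names are hypothetical.

```python
from typing import NamedTuple


class Token(NamedTuple):
    index: int     # 1-based position of the word in the sentence
    word: str
    head: int      # index of the word this one depends on; 0 means ROOT
    relation: str  # label on the arc from the head to this word


# Hand-written parse of a made-up sentence (illustration only).
PARSE = [
    Token(1, "She", 2, "nsubj"),
    Token(2, "ate", 0, "root"),
    Token(3, "fresh", 4, "amod"),
    Token(4, "fish", 2, "obj"),
]


def dependents(parse, head_index):
    """Return the words whose head is the token at head_index."""
    return [t.word for t in parse if t.head == head_index]


def describe(parse):
    """Render every arc as 'head --relation--> dependent'."""
    words = {t.index: t.word for t in parse}
    words[0] = "ROOT"
    return [f"{words[t.head]} --{t.relation}--> {t.word}" for t in parse]


for line in describe(PARSE):
    print(line)
```

Each word has exactly one head, so the whole parse fits in a flat list, and `dependents(PARSE, 2)` collects the words that hang off "ate".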

As you can see, arrows connect each word to the word it depends on. At a glance it doesn’t look incredibly complex, but once you start to think about it, you realize how hard it really is. English sentences are often ambiguous, sometimes enough that humans struggle to interpret them, so getting a computer to parse them correctly is a hard task. For example, take the following sentence: “Scientists study whales from space”. This could mean that the scientists are studying whales that are aliens, or that they are studying whales in the ocean with tools that are in space. Here’s what the parsed version of that sentence looks like in both contexts:

(This example is taken directly from the cs224n course taught at Stanford)
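The two readings can also be written down as data. The sketch below is a hand-made illustration, not output from a real parser or code from the lecture: each list gives the head of every word as a 1-based index, with 0 standing for ROOT. It assumes the Universal Dependencies convention in which "from" attaches to "space", and the names `arcs` and `differing_arcs` are hypothetical helpers.

```python
WORDS = ["Scientists", "study", "whales", "from", "space"]

# heads[i] is the 1-based index of the head of WORDS[i]; 0 means ROOT.
# Reading 1: the studying is done from space ("space" depends on "study").
HEADS_STUDY_FROM_SPACE = [2, 0, 2, 5, 2]
# Reading 2: the whales are from space ("space" depends on "whales").
HEADS_WHALES_FROM_SPACE = [2, 0, 2, 5, 3]


def arcs(words, heads):
    """Return the set of (head word, dependent word) pairs for a parse."""
    names = ["ROOT"] + words
    return {(names[h], w) for w, h in zip(words, heads)}


def differing_arcs(words, heads_a, heads_b):
    """Return the arcs found only in parse A and only in parse B."""
    a, b = arcs(words, heads_a), arcs(words, heads_b)
    return sorted(a - b), sorted(b - a)


only_a, only_b = differing_arcs(
    WORDS, HEADS_STUDY_FROM_SPACE, HEADS_WHALES_FROM_SPACE
)
print(only_a)  # [('study', 'space')]
print(only_b)  # [('whales', 'space')]
```

The two parses agree on every arc except one: where "space" attaches. That single attachment decision is the whole ambiguity.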

So as you can see, either the scientists are studying from space, or the whales are from space. Being able to resolve this kind of ambiguity is important. This was in no way a tutorial on dependency parsing; it was just me highlighting what I learned today. If you want to learn more, check out cs224n by Stanford. Also be sure to check out my email list if you want to follow along on the #100DaysOfNLP journey.
