
#100DaysOfNLP Day 1: Word Vectors

I’ve been interested in Machine Learning for a while now, as evidenced by my previous blog posts, and I’ve wanted to get into Natural Language Processing for some time. So I’ve decided to take advantage of my summer vacation and embark on 100 days of NLP. My journey will include working through the cs224n course put out by Stanford and a mentorship with Dr. Shashank Srivastava at UNC Chapel Hill, under whom I will pursue my own research. Each blog post will detail what I’ve done on one day of my NLP journey, and hopefully it’ll inspire others to get on the #100DaysOfNLP train.

As you might have guessed from the title, this post is about word vectors, which happen to be the subject of the first two lectures of cs224n. But before we get to that, we’ll need to talk about what Natural Language Processing is.

What is Natural Language Processing?

Natural Language Processing is a subfield of Artificial Intelligence that focuses on getting computers to understand human language. It’s a field that is extremely interesting to me, and I can’t wait to continue on my journey!

What are Word Vectors?

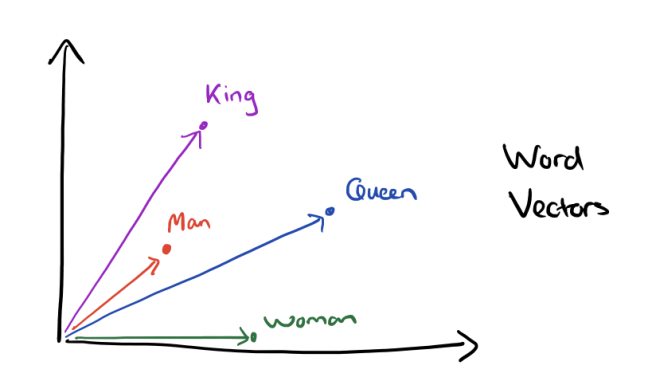

Word vectors are some of the building blocks of NLP, and they are the first thing the cs224n course covers. They’re important because they’re how we represent words in NLP. Each word in the vocabulary is represented as a vector, and all of those vectors live in a shared “word” space. There are many different types of word vectors, the simplest of which is the one-hot vector. This isn’t meant to be a tutorial on word vectors, so I won’t describe each of the types.
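To make the one-hot idea a bit more concrete, here is a minimal sketch in plain Python. The three-word vocabulary and the helper names `one_hot` and `dot` are made up for illustration; they aren’t taken from the course materials.

```python
# Toy vocabulary, invented for this example (an assumption, not from cs224n).
vocab = ["hotel", "motel", "cat"]
word_to_index = {word: i for i, word in enumerate(vocab)}


def one_hot(word):
    """Return a list with a 1 at the word's index and 0 everywhere else."""
    vector = [0] * len(vocab)
    vector[word_to_index[word]] = 1
    return vector


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


print(one_hot("hotel"))                         # [1, 0, 0]
print(dot(one_hot("hotel"), one_hot("motel")))  # 0
```

The dot product of any two different one-hot vectors is always 0, so this representation can’t tell us that “hotel” and “motel” are related. That limitation is a big part of why other kinds of word vectors exist.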

What is Next?

The next two lectures in the course cover Neural Networks and Backpropagation, both of which I have a fair amount of experience with, so I hope those lectures will be easy for me to follow. After that, I’ll move on to more in-depth NLP topics and review those. My research will begin on June 15th, and blog posts from that point on will cover everything I learned that day and my progress on my project. Until next time!
